NVIDIA’s 10-Minute Miracle: Did Months of AI Training Just Become Obsolete?

NVIDIA's latest platform has fundamentally altered the timeline for large-scale AI, turning marathon training sessions into a 10-minute sprint.

Forget the weeks and months. On the industry's headline training benchmark, a world-class, large-scale AI model has now been trained in just 10 minutes. This isn't a typo; it's a tectonic shift. NVIDIA has just demonstrated a feat of computational power that calls the entire timeline, and perhaps the entire infrastructure, of modern AI development into question.

For years, the greatest barrier to AI progress wasn't ideas; it was time. The "training run," the process of feeding a model mountains of data, was a marathon measured in months and costing millions of dollars in compute. Now, NVIDIA has effectively turned it into a 100-meter dash.

What Just Happened? The 10-Minute AI Model

In the latest round of MLCommons' MLPerf Training v5.1 benchmarks, often called the Olympics of AI hardware, NVIDIA didn't just win. It lapped the competition, and then lapped its own previous records. The show-stopping result:

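Training the colossal 405-billion-parameter Llama 3.1 model, a generative AI model comparable in scale to the frontier systems behind today's chatbots, was completed in 10 minutes and 17 seconds. One caveat: MLPerf measures the time needed to reach a fixed target quality on a standardized benchmark workload, which is a far smaller job than the full pretraining run used to build a production model.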

This was achieved using a massive cluster of 5,120 of its latest Blackwell-architecture GPUs. To put this in perspective, a similar task on previous-generation hardware (like the widely deployed H100) would have taken substantially longer, and just a few years ago, training a model of this size was considered a multi-month endeavor reserved for only the largest tech giants.
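To make that scale concrete, here is a back-of-the-envelope sketch that uses only the two figures quoted above (5,120 GPUs and 10 minutes 17 seconds). It converts the run into total GPU-hours, then asks how long the same work would take on smaller clusters. It assumes perfect linear scaling on the same Blackwell GPUs, neither of which holds in practice (real clusters lose efficiency, and older GPUs are slower), so treat the smaller-cluster figures as an optimistic lower bound. The function names are illustrative.

```python
# Back-of-the-envelope arithmetic for the MLPerf result quoted above.
# Assumption: perfect linear scaling on identical GPUs, which real clusters
# never achieve. Smaller-cluster numbers are an idealized lower bound.

RESULT_GPUS = 5_120
RESULT_SECONDS = 10 * 60 + 17  # 10 minutes 17 seconds


def gpu_hours(num_gpus: int, seconds: float) -> float:
    """Total GPU-hours consumed by a run."""
    return num_gpus * seconds / 3600


def ideal_wall_clock_hours(total_gpu_hours: float, num_gpus: int) -> float:
    """Wall-clock hours if the same work ran on num_gpus with perfect scaling."""
    return total_gpu_hours / num_gpus


total = gpu_hours(RESULT_GPUS, RESULT_SECONDS)
print(f"Total compute: {total:,.1f} GPU-hours")
for cluster in (512, 64, 8):
    hours = ideal_wall_clock_hours(total, cluster)
    print(f"{cluster:>5} GPUs: ~{hours:,.1f} hours ({hours / 24:,.1f} days)")
```

On these assumptions the benchmark run amounts to roughly 877.5 GPU-hours; a single 8-GPU server would need about 110 hours, a bit over four and a half days, before accounting for any real-world scaling losses.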

So, Is the 'Months-Long' Training Model Obsolete?

This is the critical question for every developer, CTO, and AI startup. The answer is a nuanced "Yes, and no."

For the Titans: Yes, It's Over.

For hyperscalers and frontier labs like Google, Meta, Microsoft, and OpenAI, the "months-long" training run is now officially a relic. The bar has been reset. The ability to iterate on a massive new model *before you finish your coffee* changes the game entirely. It's no longer about one perfect run; it's about hundreds of experimental runs. This accelerates the R&D cycle from a crawl to a full-blown sprint, widening the gap between those who have Blackwell and those who do not.

For Everyone Else: The Shockwave Is Coming

If you run a startup or a university research lab, you can't afford a 5,000-GPU cluster. So, no, your H100s or A100s aren't obsolete *today*. But this 10-minute miracle is a terrifying preview of the future. The capabilities of these top-tier "AI factories" will quickly trickle down. The models they create in minutes will become the open-source benchmarks you have to compete against. This demonstration shows that the computational gap is widening, and access to next-generation hardware is becoming critical for survival.

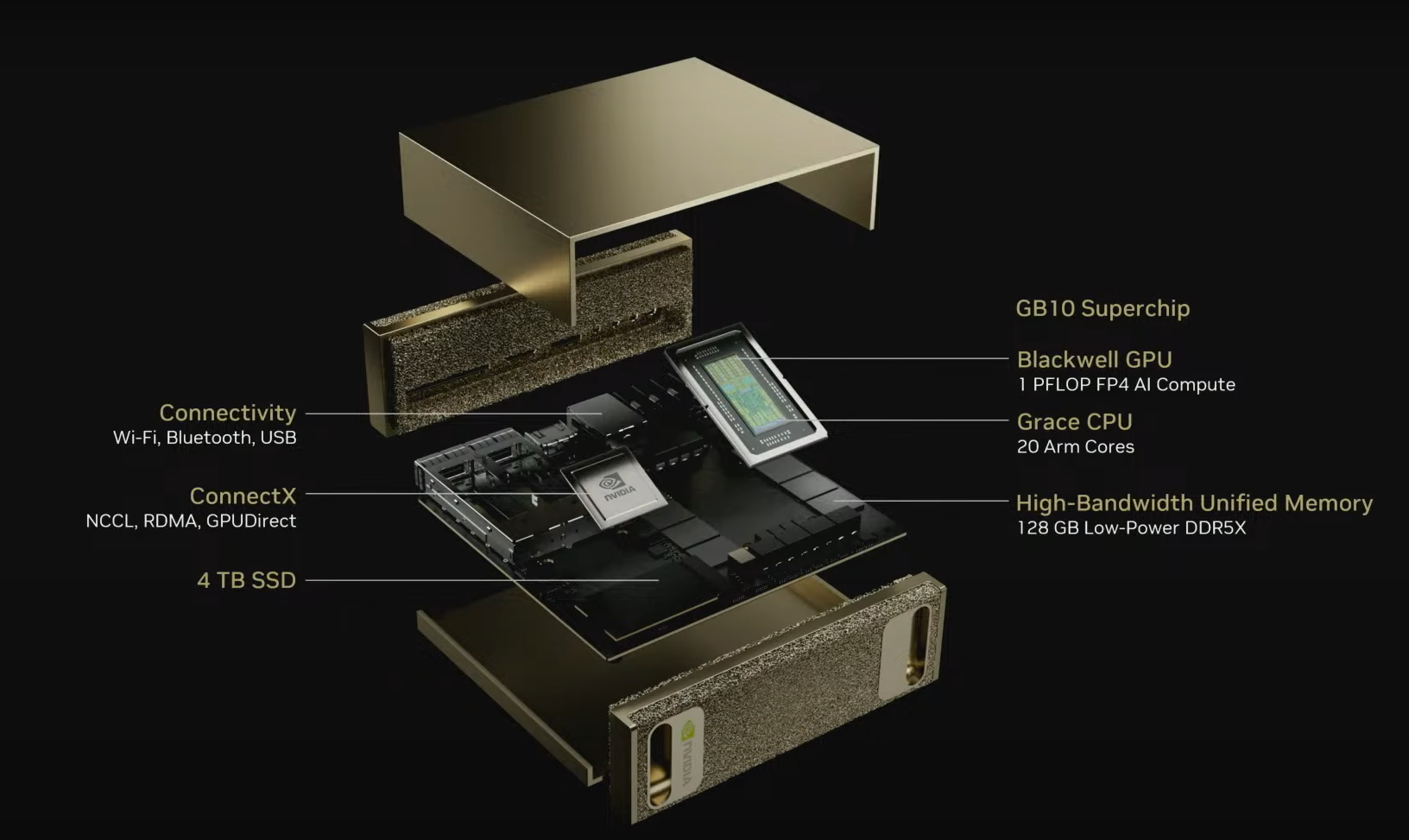

The 'Miracle' Worker: It's Not Just a Chip, It's the Factory

It's tempting to credit the Blackwell Ultra GPU alone, but that's missing the point. This 10-minute record wasn't set by a single card; it was set by an entire *platform* working in perfect unison.

- Massive Scale: This required over 5,000 GPUs working as one.

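- NVLink & InfiniBand: NVLink ties GPUs together within a server or rack, while InfiniBand links those groups across the cluster. These high-speed interconnects matter as much as the chips themselves: at this scale the GPUs must constantly exchange data, and a slower network would leave them sitting idle.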

- Full-Stack Optimization: NVIDIA's software stack, from CUDA to communication libraries such as NCCL, is tuned to keep every GPU doing useful work instead of waiting on data.
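Why do interconnects matter so much? In data-parallel training, each GPU must combine its gradients with every other GPU's after each step, typically through an all-reduce operation. The sketch below uses the standard bandwidth-only cost model for a ring all-reduce, in which each GPU sends and receives about 2(N−1)/N times the payload. The payload size and link speeds are hypothetical round numbers chosen for illustration, not NVLink or InfiniBand specifications, and the model ignores latency, overlap with compute, and the other parallelism strategies a 405B-parameter model actually requires.

```python
# Simplified bandwidth-only cost model for a ring all-reduce.
# Assumptions (illustrative, not measured): latency is ignored, links are
# fully utilized, and the payload and bandwidth figures are hypothetical.


def ring_allreduce_seconds(num_gpus: int, payload_bytes: float,
                           link_bytes_per_s: float) -> float:
    """Time for one ring all-reduce: each GPU moves 2*(N-1)/N * payload bytes."""
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    traffic = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic / link_bytes_per_s


GB = 1e9
payload = 10 * GB  # hypothetical gradient volume exchanged per step

for label, bandwidth in [("slow link, 50 GB/s", 50 * GB),
                         ("fast link, 500 GB/s", 500 * GB)]:
    t = ring_allreduce_seconds(num_gpus=8, payload_bytes=payload,
                               link_bytes_per_s=bandwidth)
    print(f"{label}: {t:.3f} s per all-reduce")
```

In this simplified model, a tenfold faster link cuts synchronization time tenfold (0.350 s versus 0.035 s per all-reduce here), and because the exchange repeats every training step, the savings compound over the whole run.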

This is what NVIDIA means when it talks about "AI Factories." They aren't just selling chips; they're selling the entire assembly line, pre-tuned for maximum speed.

The Bottom Line: The Gap Just Collapsed

While your personal AI training setup isn't "obsolete" overnight, the *paradigm* of AI development is. The "10-Minute Miracle" signals that the primary bottleneck in AI is no longer computation time; it's the speed of human ideas. The gap between a "what if" hypothesis and a fully trained, massive AI model has just collapsed from months to minutes.

The question is no longer "How long will it take to build?" The question is now, "What can you build by lunch?"